Research Projects

Image Source: Magic Studio

Open-Ended Evolution with Large Populations via Parallel Connection Machines (Singular S1 Boards)

This research project explores methods for driving open-ended evolution (OEE) using a network of Singular S1 connection machines. By harnessing the combined computational power of these interconnected boards, the project seeks to simulate evolving populations of unprecedented size. The core hypothesis is that larger populations, combined with complex and dynamic environments, will drive the emergence of open-ended evolution. This approach makes it possible to explore evolutionary dynamics that more closely mimic the vast scale and complexity of biological systems. The aim is to create a computational ecosystem in which information can flow and evolve across the network, potentially leading to the continuous generation of novelty and increasing complexity over time.
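
As a rough illustration of how one population might be split across networked boards, the sketch below uses a generic island model. Each island (standing in for one board) evolves its own bitstring sub-population against a target that shifts over time, and every few generations each island passes its best individual to the next island on a ring. The ring topology, migration interval, bitstring genomes and shifting target are all assumptions made for illustration; this is not the project's Singular S1 implementation.

```python
import random

GENOME_LEN = 16  # hypothetical bitstring genome length

def fitness(genome, target):
    # Count positions that match the current environmental target.
    return sum(g == t for g, t in zip(genome, target))

def mutate(genome, rate, rng):
    # Flip each bit independently with probability `rate`.
    return [1 - g if rng.random() < rate else g for g in genome]

def step(island, target, rng):
    # One generation: binary tournament selection, then mutation.
    offspring = []
    for _ in range(len(island)):
        a, b = rng.sample(island, 2)
        parent = a if fitness(a, target) >= fitness(b, target) else b
        offspring.append(mutate(parent, 1.0 / GENOME_LEN, rng))
    return offspring

def run(num_islands=4, island_size=20, generations=50,
        migrate_every=5, shift_every=10, seed=0):
    rng = random.Random(seed)
    # Each island stands in for the sub-population held by one board.
    islands = [[[rng.randint(0, 1) for _ in range(GENOME_LEN)]
                for _ in range(island_size)]
               for _ in range(num_islands)]
    target = [rng.randint(0, 1) for _ in range(GENOME_LEN)]
    for gen in range(1, generations + 1):
        if gen % shift_every == 0:
            # Dynamic environment: flip one random bit of the target.
            i = rng.randrange(GENOME_LEN)
            target[i] = 1 - target[i]
        islands = [step(isl, target, rng) for isl in islands]
        if gen % migrate_every == 0:
            # Ring migration: island k's worst member is replaced by island k-1's best.
            bests = [max(isl, key=lambda g: fitness(g, target)) for isl in islands]
            for k, isl in enumerate(islands):
                worst = min(range(len(isl)), key=lambda j: fitness(isl[j], target))
                isl[worst] = list(bests[k - 1])
    return islands, target

islands, target = run()
print("best fitness:", max(fitness(g, target) for isl in islands for g in isl))
```

Migration is what lets information flow between otherwise independent sub-populations; raising `num_islands` grows the total population without any single island becoming larger.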

Team Members: Erik Hemberg, Steven Jorgensen

Collaborators: Joe Bates

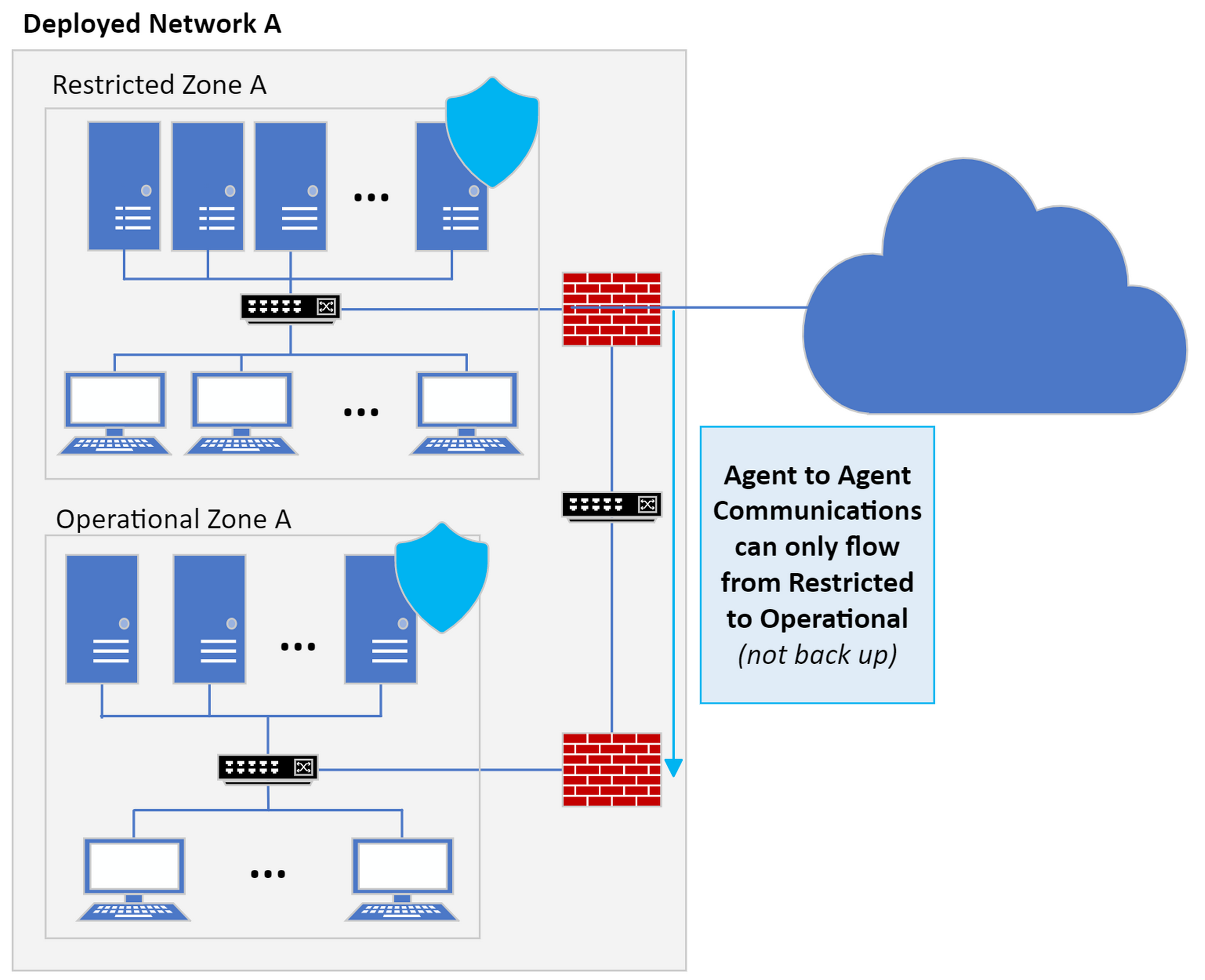

Image Source: TTCP Cage Challenge

Leveraging Capabilities of LLMs for Autonomous Cybersecurity Agents

This research project investigates the use of large language models (LLMs) as intelligent controllers for red and blue cybersecurity agents in simulated environments. In particular, the goal is to assess the capabilities of LLMs in identifying vulnerabilities, selecting penetration testing tools, and executing automated cyber campaigns on behalf of red agents, while blue agents defend and maintain system integrity. The study is conducted using the CybORG platform, which simulates real-world cybersecurity scenarios and allows agents to make decisions based on network system observations at each step.

The research is structured around three main objectives. The first is to explore the feasibility of using LLMs as full-fledged agent controllers, where the model takes the current simulation observations as input and then determines the next course of action. The second is to assess whether LLMs can generate code that replicates their decision-making behavior, and to compare the performance of this generated code with that of the directly controlled LLM agents. The third is to evaluate the transferability of the agents: whether they can adapt robustly to adversarial cyber environments that involve new scenarios, different network infrastructures, and unseen adversaries.
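
The first objective, an LLM acting directly as the agent controller, amounts to an observe, prompt, act loop. The sketch below shows that loop under stated assumptions: `query_llm` is a hypothetical stand-in for a real model call (a fixed rule here, so the example runs offline), and `ToyEnv` is a toy environment, not the CybORG API. Mapping the free-text reply onto a fixed action list is one simple way to guard against unparseable model output.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# Hypothetical action space for a blue (defending) agent.
ACTIONS = ["monitor", "restore", "wait"]

@dataclass
class ToyEnv:
    # Toy stand-in for a simulator such as CybORG: only tracks compromised hosts.
    compromised: Set[str] = field(default_factory=lambda: {"host1"})

    def observe(self) -> str:
        hosts = ", ".join(sorted(self.compromised)) or "none"
        return f"Compromised hosts: {hosts}"

    def step(self, action: str, target: Optional[str]) -> None:
        # Only "restore" changes state in this toy.
        if action == "restore" and target in self.compromised:
            self.compromised.discard(target)

def build_prompt(observation: str) -> str:
    # Turn the current observation into an instruction for the model.
    return (f"You are a blue-team agent. Observation: {observation}\n"
            f"Reply with one of {ACTIONS}, optionally followed by a host name.")

def query_llm(prompt: str) -> str:
    # Hypothetical placeholder for a real LLM call; a fixed rule keeps it offline.
    listed = prompt.split("Compromised hosts: ")[1].split("\n")[0]
    if listed == "none":
        return "wait"
    return f"restore {listed.split(', ')[0]}"

def parse_action(reply: str) -> Tuple[str, Optional[str]]:
    # Map the free-text reply to a known action; fall back to "wait" otherwise.
    parts = reply.strip().split()
    if not parts or parts[0] not in ACTIONS:
        return "wait", None
    return parts[0], (parts[1] if len(parts) > 1 else None)

def run_episode(env: ToyEnv, steps: int = 3) -> List[str]:
    # Observe -> prompt -> query -> parse -> act, once per step.
    log = []
    for _ in range(steps):
        action, target = parse_action(query_llm(build_prompt(env.observe())))
        env.step(action, target)
        log.append(f"{action} {target or ''}".strip())
    return log

print(run_episode(ToyEnv()))  # ['restore host1', 'wait', 'wait']
```

For the second objective, the same `run_episode` loop could be driven by model-generated policy code in place of `query_llm`, which makes the two controllers directly comparable.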

Team Members: Eric Liu, Erik Hemberg

Collaborators: Ally Sansone

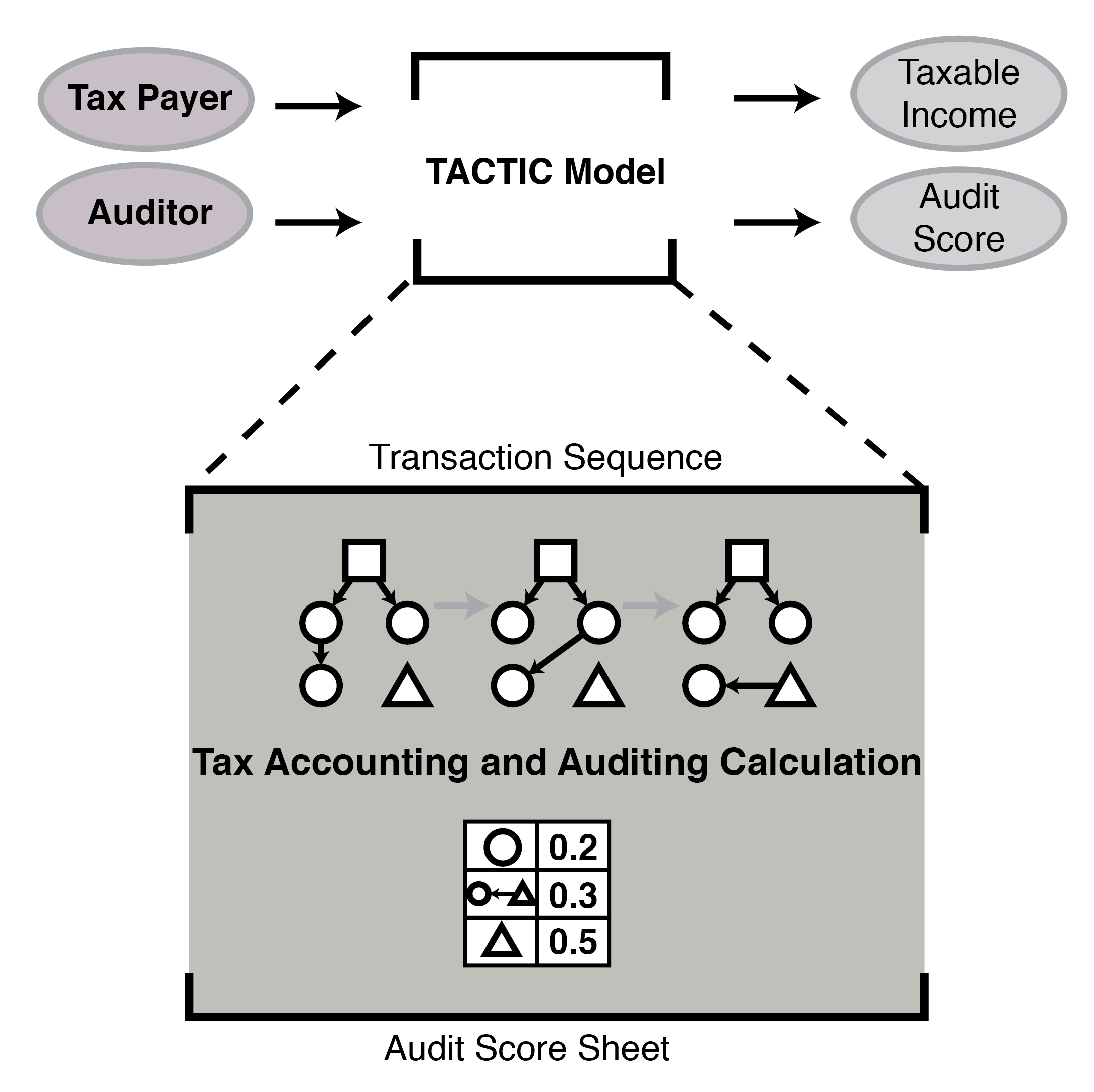

Detecting and Auditing Tax Avoidance Through Coevolution with LLM Agents

This research explores how AI can help detect emerging tax avoidance strategies using a coevolutionary framework. Tax avoidance schemes exploit legal loopholes to minimize tax liabilities, making it difficult for auditors to keep up as strategies evolve. Two Large Language Model (LLM)-based agents are used: a transaction agent that generates tax-compliant transactions designed to minimize liabilities, and an audit agent that learns to spot suspicious patterns. These agents evolve together, mimicking the real-world back-and-forth between tax planners and auditors. This setup not only uncovers new tax strategies but also helps improve audit detection methods over time. By combining natural language processing and evolutionary techniques, this work provides a new way to study how tax avoidance schemes emerge and how audits can become more effective at detecting them.
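
The structure of the coevolutionary loop can be pictured with a deliberately tiny numeric toy in which the LLM agents are replaced by numbers. Each transaction strategy is a value in [0, 1] describing how aggressively it reduces liabilities, and each audit strategy is a detection threshold. A transaction scores when it stays under an auditor's threshold; an auditor scores when it flags a transaction, but pays a cost for thresholds so low they would also flag ordinary transactions. The encoding, payoffs and mutation step are hypothetical and only illustrate competitive coevolution, not the project's LLM-based agents.

```python
import random

def transaction_fitness(a, auditors):
    # Tax saved counts only against auditors whose threshold the transaction stays under.
    return sum(a for t in auditors if a <= t)

def auditor_fitness(t, transactions, false_alarm_cost=0.5):
    # Reward detections, but penalize low thresholds that would also flag ordinary transactions.
    detected = sum(1 for a in transactions if a > t)
    return detected - false_alarm_cost * (1 - t) * len(transactions)

def evolve(pop, fitness_fn, rng, sigma=0.05):
    # Keep the better half and refill with Gaussian-mutated copies clipped to [0, 1].
    ranked = sorted(pop, key=fitness_fn, reverse=True)
    elite = ranked[: len(pop) // 2]
    parents = ranked[: len(pop) - len(elite)]
    children = [min(1.0, max(0.0, p + rng.gauss(0, sigma))) for p in parents]
    return elite + children

def coevolve(generations=30, size=10, seed=1):
    rng = random.Random(seed)
    transactions = [rng.random() for _ in range(size)]  # aggressiveness in [0, 1)
    auditors = [rng.random() for _ in range(size)]      # detection thresholds
    for _ in range(generations):
        # Each population is scored against the other's current members, then both update together.
        new_tx = evolve(transactions, lambda a: transaction_fitness(a, auditors), rng)
        new_au = evolve(auditors, lambda t: auditor_fitness(t, transactions), rng)
        transactions, auditors = new_tx, new_au
    return transactions, auditors

tx, au = coevolve()
print(f"mean aggressiveness {sum(tx) / len(tx):.2f}, mean threshold {sum(au) / len(au):.2f}")
```

The key property is that neither population has a fixed objective: each one's fitness depends on the other's current members, which is what produces the back-and-forth described above.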

Team Members: Joy Bhattacharya, Erik Hemberg

Collaborators: Sofia Ocampo Quinche, Andres Felipe Leguizamon Lopez, Carlos David Sánchez Niño, Germán Camilo

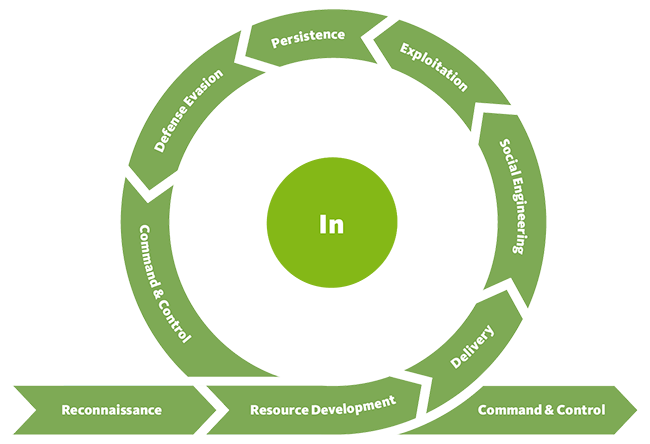

Image Source: Unified Kill Chain

AI-Supported Discovery of Privilege Escalation and Remote Exploit Chains

Using generative AI and AI planning, this cybersecurity project develops a method for discovering chains of known Privilege Escalation (PE) and remote exploits in a computer network. Chains of these two types of exploits enable lateral movement within a network, from one compromised host to another. They also enable pivoting, a technique in which an attacker circumvents network segmentation or internal firewalls to move deeper into a network: after gaining initial access to one host, the attacker can reach other hosts on the network that would otherwise be unavailable.
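
Viewed as a planning problem, a chain of remote and privilege escalation exploits is a path through states of the form (host, privilege level). The breadth-first search below finds a shortest such chain on a hypothetical three-host network. The hosts, exploit names, and the rule that pivoting from a host requires root on it are invented for illustration; the project's own method, which combines generative AI with AI planning, is not reproduced here.

```python
from collections import deque

# Hypothetical remote exploits: (name, source host, target host, privilege needed on source).
REMOTE = [
    ("remote_A", "web", "app", "user"),
    ("remote_B", "app", "db", "root"),
]
# Hypothetical local privilege escalation exploits: host -> exploit lifting user to root.
PRIV_ESC = {"app": "privesc_C"}

RANK = {"user": 0, "root": 1}

def successors(state):
    host, priv = state
    # Privilege escalation stays on the same host.
    if priv == "user" and host in PRIV_ESC:
        yield PRIV_ESC[host], (host, "root")
    # Remote exploits move laterally, landing as an unprivileged user.
    for name, src, dst, needed in REMOTE:
        if src == host and RANK[priv] >= RANK[needed]:
            yield name, (dst, "user")

def find_chain(start, goal_host):
    # Breadth-first search returns a shortest exploit chain, or None if unreachable.
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, chain = frontier.popleft()
        if state[0] == goal_host:
            return chain
        for exploit, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, chain + [exploit]))
    return None

print(find_chain(("web", "user"), "db"))  # ['remote_A', 'privesc_C', 'remote_B']
```

The resulting chain shows the pivot pattern from the paragraph above: `db` is not reachable from the initial foothold on `web` until the attacker escalates privileges on the intermediate host `app`.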

Team Members: Erik Hemberg, Andrea Vignali, Christián Colón, Miguel Tulla

Collaborators: Giancarlo Sperli, Simon Pietro Romano, Masataro Asai

LLM-Supported Natural Language to Bash Translation

The Bash command-line interface has complex syntax and requires extensive specialized knowledge. This research project explores the capabilities of Large Language Models (LLMs) for translating natural language to Bash commands (NL2SH). The goal of the project is to find reliable methods for improving NL2SH translation accuracy. So far, we have developed new NL2SH datasets and models, as well as a novel benchmark for evaluating model performance. Current findings suggest that in-context learning, in-weight learning, constrained decoding, and parsing can significantly improve the performance of LLMs on NL2SH tasks.
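
Two of the techniques named above, in-context learning and parsing, can be sketched without a model. Below, `build_prompt` assembles a few-shot prompt from example (description, command) pairs, and `extract_command` pulls a single command out of a free-form reply, preferring the contents of a fenced code block. The example pairs, prompt wording, and extraction rule are assumptions for illustration, not the project's datasets or parsing method; a real pipeline would send the prompt to an LLM between these two steps.

```python
import re

FENCE = "`" * 3  # three backticks, built at runtime so the snippet nests in Markdown

# Hypothetical few-shot examples of (natural language, Bash) pairs.
EXAMPLES = [
    ("list all files, including hidden ones", "ls -a"),
    ("count the lines in notes.txt", "wc -l notes.txt"),
]

def build_prompt(request, examples=EXAMPLES):
    # In-context learning: show solved examples before the new request.
    shots = "\n".join(f"Task: {nl}\nCommand: {sh}" for nl, sh in examples)
    return f"{shots}\nTask: {request}\nCommand:"

def extract_command(reply):
    # Parsing: prefer the first fenced code block, else use the whole reply,
    # then keep its first non-empty line without a leading "$ " prompt.
    match = re.search(FENCE + r"[\w-]*\n(.*?)" + FENCE, reply, re.DOTALL)
    text = match.group(1) if match else reply
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    return lines[0].removeprefix("$ ") if lines else ""

print(build_prompt("show disk usage of the current directory"))
reply = "Here you go:\n" + FENCE + "bash\ndu -sh .\n" + FENCE
print(extract_command(reply))  # du -sh .
```

Extraction matters because chat-tuned models often wrap a command in explanation or Markdown, which would otherwise be scored as part of the command.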

Team Members: Finn Westenfelder, Erik Hemberg, Miguel Tulla, Stephen Moskal

Collaborators: Silviu Chiricescu

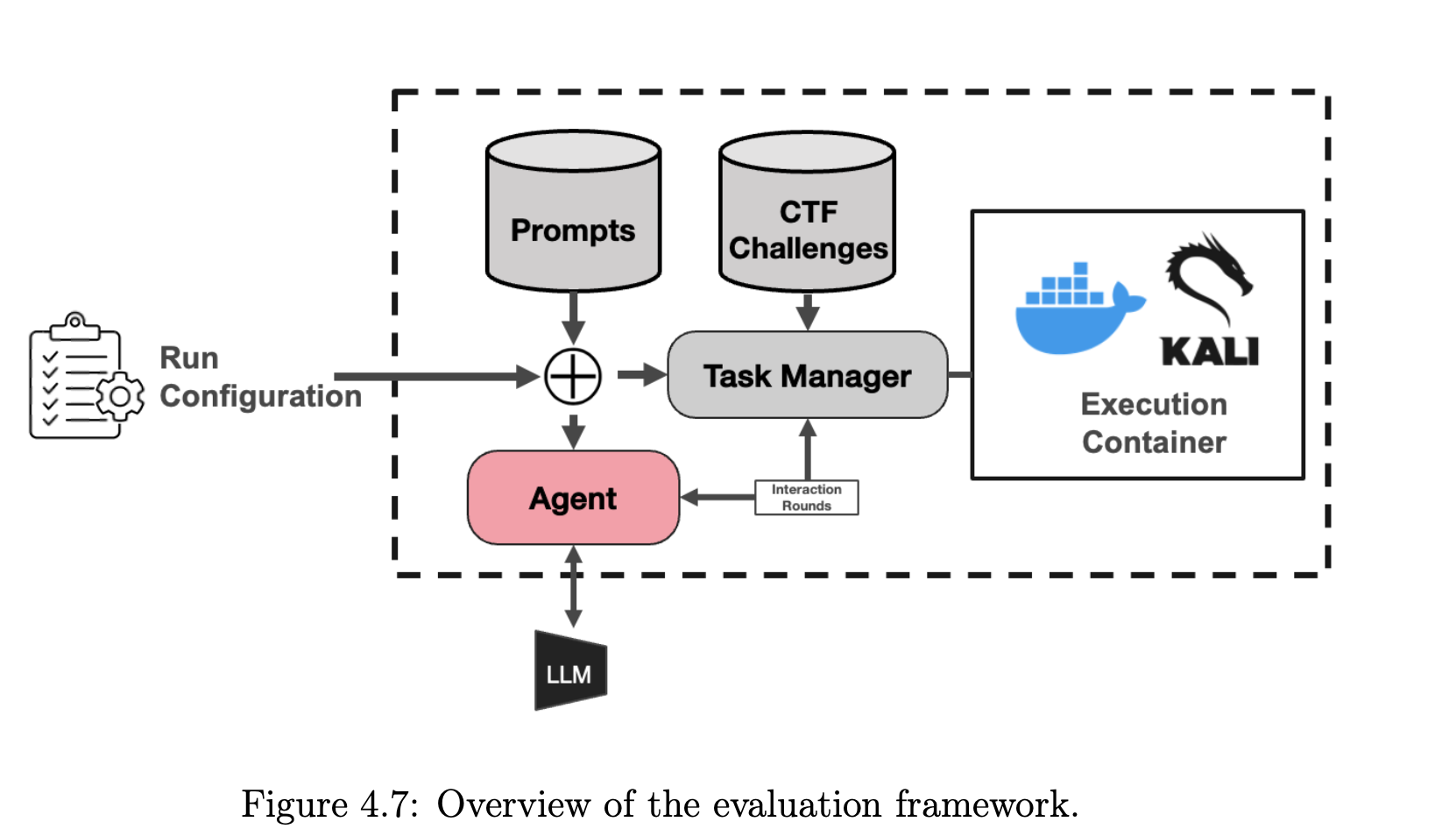

Large Language Models for Automatic Pen-Testing

This project explores using Large Language Models (LLMs) to automate penetration tests and Cyber Capture the Flag (CTF) challenges, bridging the gap between static tools and human intuition in cybersecurity. Key questions include: How can LLMs be fine-tuned for complex tasks like forensic analysis? How can their robustness and adaptability be enhanced? Will ensemble approaches improve performance across various CTF challenges? And what strategies can mitigate LLM limitations in complex cybersecurity scenarios?
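
One simple form of the ensemble approach asked about above is majority voting: several models (or several samples from one model) each propose a flag for a CTF challenge, and the most frequent answer is submitted. The sketch assumes candidate flags have already been collected; the flag format and the tie-breaking rule (the earliest proposal wins) are illustrative choices, not findings of the project.

```python
from collections import Counter
from typing import List, Optional

def majority_vote(candidates: List[str]) -> Optional[str]:
    # Trim whitespace and drop empty answers before counting.
    cleaned = [c.strip() for c in candidates if c and c.strip()]
    if not cleaned:
        return None
    # most_common lists equal counts in first-encountered order, so ties go to the earliest answer.
    return Counter(cleaned).most_common(1)[0][0]

# Hypothetical flags proposed by four runs on the same challenge.
proposals = ["flag{abc}", "flag{xyz}", " flag{abc} ", ""]
print(majority_vote(proposals))  # flag{abc}
```

Voting only helps when the ensemble members make partly independent errors; weighting votes by each model's past accuracy is a natural variation.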

Team Members: Sam Laney, Erik Hemberg, Stephen Moskal

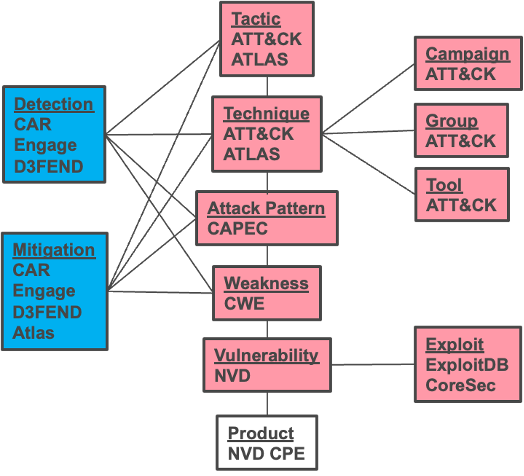

BRON

Many public sources of cyber threat and vulnerability information exist to help defend cyber systems. BRON (Swedish for "bridge") is a property graph that links together threat data from the MITRE ATT&CK, CAPEC, CWE, CVE, MITRE Engage, MITRE D3FEND, MITRE CAR, exploitDB, and MITRE ATLAS data sources. The data types are connected by bidirectional edges. BRON preserves all entries and relations of the original sources while enabling bidirectional, relational path tracing within an aggregate data graph.
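
The bidirectional linking described above can be pictured with a plain-Python property graph in which every edge is stored in both directions, so a path can be traced from a tactic down to a vulnerability or from a vulnerability back up to a tactic. The node identifiers below are placeholders rather than real ATT&CK, CAPEC, CWE, or CVE entries, and `PropertyGraph` is a hypothetical toy class, not BRON's actual storage backend or query interface.

```python
from collections import defaultdict, deque

class PropertyGraph:
    # Toy property graph: nodes carry attributes, edges are stored both ways.
    def __init__(self):
        self.props = {}
        self.adj = defaultdict(set)

    def add_node(self, node_id, **props):
        self.props[node_id] = props

    def link(self, a, b):
        # Bidirectional edge, so traversal works from either end.
        self.adj[a].add(b)
        self.adj[b].add(a)

    def trace(self, start, goal):
        # Breadth-first path tracing between any two nodes; None if disconnected.
        parents = {start: None}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if node == goal:
                path = []
                while node is not None:
                    path.append(node)
                    node = parents[node]
                return path[::-1]
            for nxt in sorted(self.adj[node]):
                if nxt not in parents:
                    parents[nxt] = node
                    queue.append(nxt)
        return None

g = PropertyGraph()
# Placeholder IDs, one node per data type, chained tactic -> technique -> CAPEC -> CWE -> CVE.
chain = ["tactic:demo", "technique:demo", "capec:demo", "cwe:demo", "cve:demo"]
for node in chain:
    g.add_node(node, datatype=node.split(":")[0])
for a, b in zip(chain, chain[1:]):
    g.link(a, b)

print(" -> ".join(g.trace("cve:demo", "tactic:demo")))
# cve:demo -> cwe:demo -> capec:demo -> technique:demo -> tactic:demo
```

Because each link is added once but stored in both adjacency sets, the same `trace` call answers both "which weaknesses does this tactic lead to?" and "which tactics does this vulnerability support?".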

BRON supports cyber hunting and other cybersecurity tasks. By unifying these knowledge sources, it relieves investigators of long, hard-to-track forays through each of them. BRON also supports AI tools, tasks, and research: it has been used for retrieval-augmented generation (RAG) and by autonomous AI agents. Because it has been crawled and integrated into many Large Language Models, it can also be queried in an inferential mode. Finally, it supports instruction-based and reasoning fine-tuning of foundation models by serving as a source of inquiries.

Team Members: Erik Hemberg

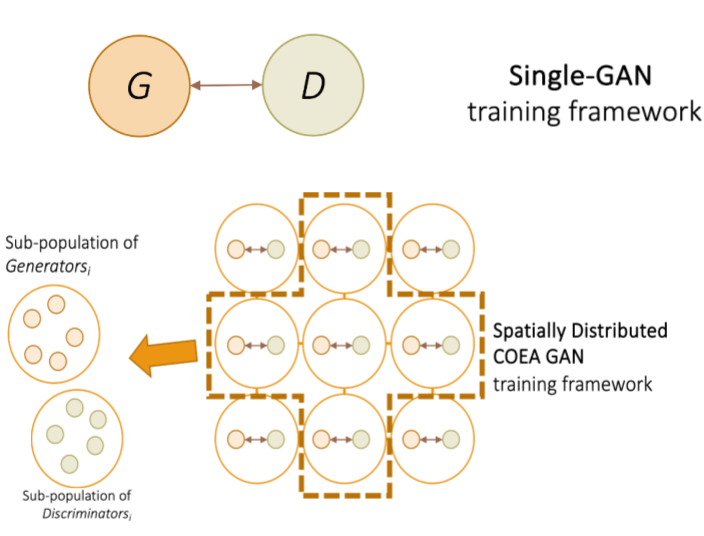

Lipizzaner

Artificial Neural Network (ANN) training can be a complex process. Lipizzaner is a framework that combines gradient-based optimization with coevolutionary techniques to train ANNs, drawing on the advantages of both methods to achieve fast and stable results. To reduce computational costs, Lipizzaner employs a spatial topology in which individuals interact only with their neighbors. The framework has two main applications: Lipizzaner-GAN, which uses competitive coevolution and stochastic gradient descent to train a population of generative adversarial networks (GANs), and Lipizzaner-AE, which applies cooperative coevolution and stochastic gradient descent to train a population of autoencoders.
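
The saving from a spatial topology comes from restricting interactions to local neighborhoods. The sketch below assumes a two-dimensional toroidal grid in which each cell interacts only with itself and its four orthogonal neighbors (a five-cell neighborhood), and that each cell evaluates every generator in its neighborhood against every discriminator in it. Under those assumptions the number of evaluations grows linearly with the number of cells instead of quadratically: on a 10 x 10 grid that is 2,500 evaluations per iteration versus 10,000 for all-versus-all, although the local scheme only pays off once the grid has more than 25 cells. The grid shape and neighborhood are assumptions for illustration, and the gradient-based training step itself is omitted.

```python
def neighborhood(row, col, rows, cols):
    # The cell itself plus its north, south, west and east neighbors on a torus,
    # with duplicates removed for grids narrower than three cells.
    cells = [
        (row, col),
        ((row - 1) % rows, col),
        ((row + 1) % rows, col),
        (row, (col - 1) % cols),
        (row, (col + 1) % cols),
    ]
    return list(dict.fromkeys(cells))

def evaluations_per_iteration(rows, cols, local=True):
    # Generator-discriminator evaluations needed for one training iteration.
    n = rows * cols
    if local:
        # Each cell pairs every generator in its neighborhood with every discriminator in it.
        k = len(neighborhood(0, 0, rows, cols))
        return n * k * k
    # Global alternative: every generator against every discriminator.
    return n * n

print(neighborhood(0, 0, 3, 3))                        # [(0, 0), (2, 0), (1, 0), (0, 2), (0, 1)]
print(evaluations_per_iteration(10, 10))               # 2500
print(evaluations_per_iteration(10, 10, local=False))  # 10000
```

Wrapping the grid into a torus gives every cell the same number of neighbors, so no cell at an edge is starved of interactions.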

Team Members: Erik Hemberg, Steven Jorgensen

Collaborators: Jamal Toutouh